**This post is part of our series highlighting startups that share our mission of trying to make people’s lives just a little easier**

Machine learning sounds pretty simple, in theory. For example, if you want to create a tool that identifies faces in photos, you feed tons of pictures of people into a machine learning tool and, after a while, the software learns what a face looks like and how to spot one in a photo.
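To make that idea a little more concrete, here is a deliberately tiny sketch of "learning from labelled examples." It uses a nearest-centroid classifier, a generic textbook technique rather than anything Lobe has said it uses, and it assumes each photo has already been boiled down to a short list of numbers. The two-number "features" below are made up purely for illustration; real face recognition relies on far richer features and models.

```python
# Toy "learn from labelled examples" classifier (nearest centroid).
# Each example is a hypothetical feature vector standing in for a photo.
from math import dist  # Euclidean distance, Python 3.8+


def train(examples):
    """examples: list of (features, label). Returns {label: average features}."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    # The "learned" model is just the average example for each label.
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Return the label whose average example is closest to the new features."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Made-up training data: two "face" photos and two "not face" photos.
training_data = [
    ([0.9, 0.8], "face"),
    ([0.8, 0.9], "face"),
    ([0.1, 0.2], "not face"),
    ([0.2, 0.1], "not face"),
]
model = train(training_data)
print(predict(model, [0.85, 0.75]))  # face
```

The more (and more varied) labelled examples you feed in, the better those averages represent each category, which is the intuition behind "after a while, the software learns."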

But it’s more complicated than it seems. A whole load of custom code, different software, and advanced data analytics actually goes into the recipe for a successful piece of machine learning.

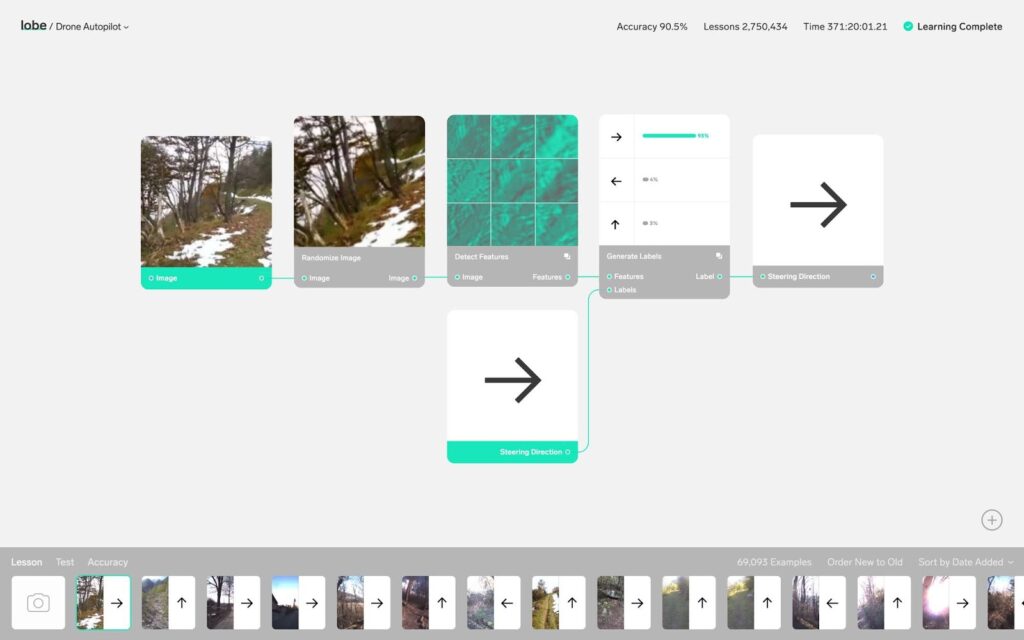

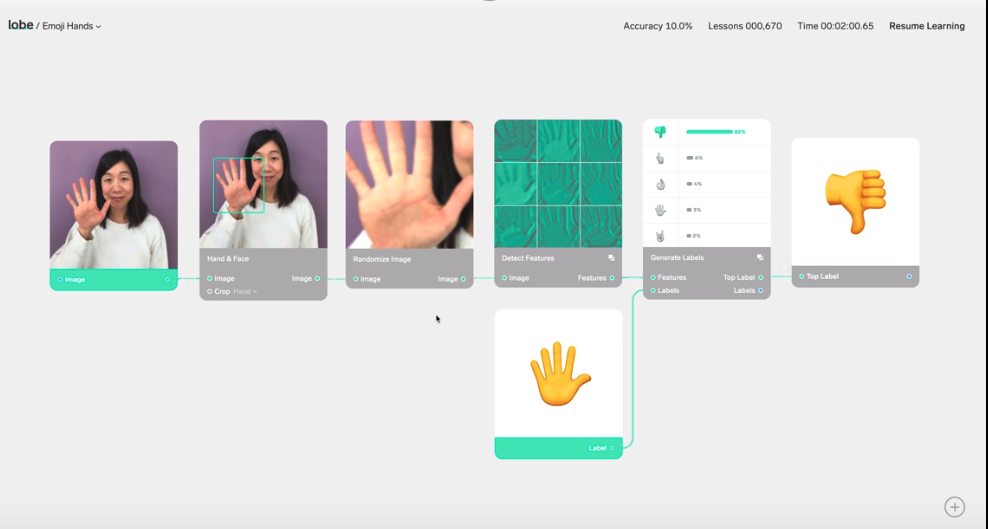

Enter Lobe, a new startup that aims to make machine learning as simple as clicking together a couple of LEGO bricks. It gets its name from the building blocks of its drag-and-drop visual interface, known as “lobes”, which appear as cards that can be moved around, connected, and built upon.

Let’s face it, machine learning doesn’t sound like a simple concept you could chat about with your granny over tea. It’s a complex field that took years to develop, and mastering it takes even longer.

That leads most people to think it’s not for them. But if you’ve ever had an idea (and who hasn’t?!), chances are it could benefit from machine learning in some way.

To get started on Lobe, you simply upload a batch of images or sound files to the website, and the tool immediately starts processing them to learn what it can from them.

The goal? To let any old non-techie turn their wildest ideas into reality.

Mike Matas, founder of Lobe, says: “There’s been a lot of situations where people have kind of thought about AI and have these cool ideas, but they can’t execute them. So those ideas just get shed, unless you have access to an AI team.”

According to a recent survey of 2,500 developers, 28% of respondents named AI and machine learning as the technologies they were backing the most in 2018. Even non-techies are somewhat enamored with the idea.

For the tech-minded lot, plenty of tools already exist in the developer world, but they require writing code and knowing several programming languages to build module upon module. That makes them exclusive: only people with the technical know-how can use them. Lobe, by contrast, aims to be an inclusive tool for anyone with an idea.

“You need to know how to piece these things together, there are lots of things you need to download,” says Matas of the tools that are “exclusive” to developers. “I’m one of those people who if I have to do a lot of work, download a bunch of frameworks, I just give up. So as a UI designer I saw the opportunity to take something that’s really complicated and reframe it in a way that’s understandable.”

How Lobe Works

The tech industry has fully embraced AI and machine learning, welcoming them with open arms and treating them like the guest of honor at a dinner party. In the survey mentioned above, 73% of respondents said they were interested in learning about machine learning platforms, even though only 17% had worked with AI technologies in 2017.

The team behind Lobe has tapped into the fact that people want machine learning technology but still find it a pretty confusing concept.

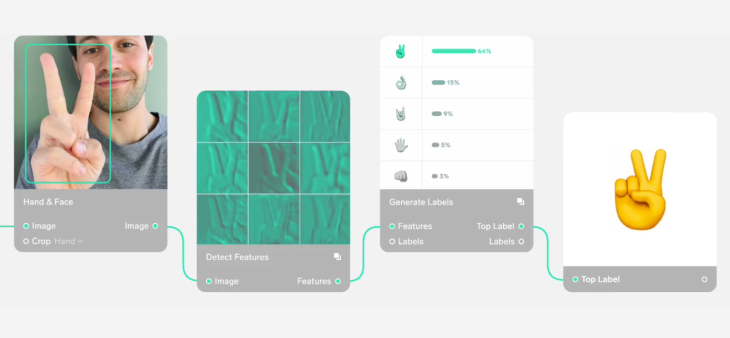

By taking the complicated, code-riddled parts of machine learning (we’re talking feature extraction and labelling here) and turning them into a simple, easy-to-use visual interface, Lobe basically offers machine learning for dummies.

Take the example below. Lobe’s platform lets users create applications that read hand gestures in real photos and match them to the corresponding emoji, without going anywhere near a single line of code.

This opens up a whole new dimension of possibilities: people can create and build their own apps without needing advanced technical knowledge.

Despite its potential, Lobe is still pretty basic in its execution and interface.

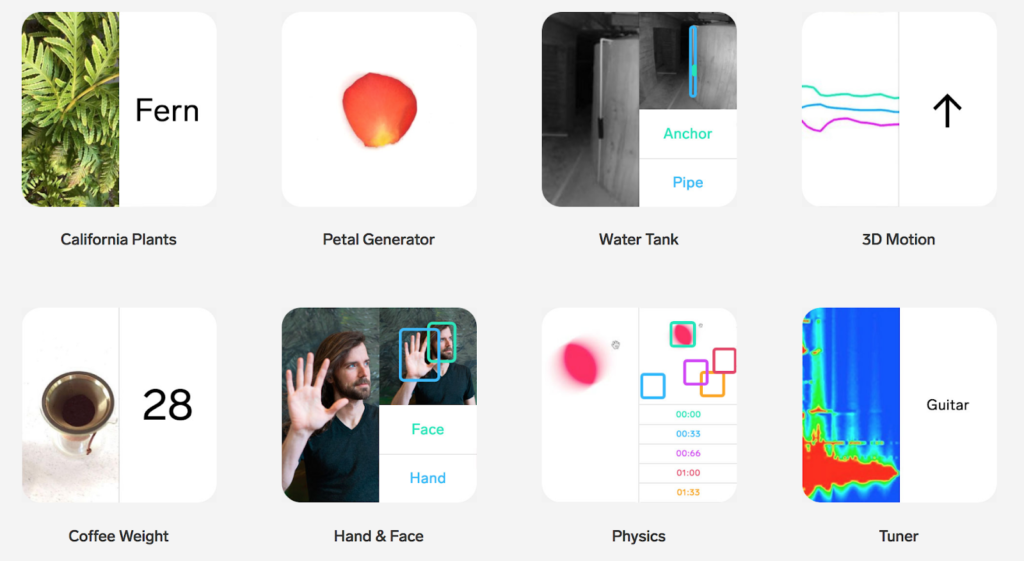

For now, it works only with images and sensor data. Existing blueprints, for example, can identify plants from images or act as a tuner for different string instruments.

“As a UI designer when I first started, I made everything in Photoshop,” says Matas. “Everything I designed was through a static interface. So every solution was a button on the screen. Then I learned UX prototyping tools. With them, we could do iterative prototyping, and suddenly we could solve problems with motions, interaction, and gestures. And you get things like the iPhone X home swipe.”

What This Means for the Future of AI

For Matas, AI is bringing the next wave of user interfaces with it.

He compares the early days of machine learning with those of PCs, when only computer scientists and engineers could operate the new machines: “they were the only people able to use them, so they were the only people able to come up with ideas about how to use them,” he says.

It wasn’t until the later 1980s that computers became a creative tool for everyone, and that was largely thanks to vast improvements in the user interface that made them easier for non-techies to get behind.

Lobe’s aim is to bring machine learning to the masses and break it free from the confines of the tech world. “People outside the data science community are going to think about how to apply this to their field,” Matas says. Where they once needed an AI expert to step in and help build the learning systems, they will now be able to create a working model themselves.

For a long time, AI was seen as the future of the tech world, but with tools like Lobe popping up, machine learning and deep learning now look set to be the future of every industry.